@Mumbly said:

The sarcastic answer he got in response to his previous post only added fuel to the fire, so there's that.

Naturally, the host is frustrated by the lack of response from the DC, but clients with weeks-long outages bear the same frustration, and some updates here and there, even if there's not much to say, just so that they know they aren't forgotten, could help the situation.

Personally I do agree that there might be a couple fewer tickets submitted if VirMach dropped by here with a quick status update a little more often. Although I don't expect saying that you have nothing to add to the status page comments will do that much good. VirMach does seem to update us when he has something tangible to say.

The first comment by AlSwearengen may have been out of frustration, and it received a sarcastic answer from VirMach. The second comment by AlSwearengen was a bit of an escalation, so I stepped in before it got out of hand. That is the job as I see it.

A second thing that also influenced me was that AlSwearengen has never commented on VirMach before and has not stated that he is a VirMach customer affected by any of these outages. If he is a VirMach customer then I can understand his frustration, but I still cannot condone the tone.

This is not to say that it would have been one sided if I had not said something. I am sure that VirMach is more than able to provide many snarky/sarcastic comments of his own, and might even enjoy doing so. But where would that get us besides a shit show, and a Rant thread.

The issue isn't the frequency of the updates, it's the lack of progress and the lack of details. This isn't acceptable, AT ALL.

You picked the wrong providers if you can't get responses in less than a week. You need new leadership that can get shit done in a reasonable timeframe. This is mind boggling, really, your attitude with so many down customers. You're in the wrong industry.

@FrankZ said: I am sure that VirMach is more than able to provide many snarky/sarcastic comments of his own, and might even enjoy doing so. But where would that get us besides a shit show, and a Rant thread.

Hey I'm just following the instructions in the thread title. I can gauge when a serious response is necessary and this isn't one of those situations. The guy is just here to try to win a philosophical debate and anything I say won't change that.

@AlSwearengen said: You picked the wrong providers if you can't get responses in less than a week. You need new leadership that can get shit done in a reasonable timeframe. This is mind boggling, really, your attitude with so many down customers. You're in the wrong industry.

You're right we should have picked another provider and then got them to infiltrate Flexential datacenters in an operation to get the servers back since the servers are still within Flexential providers. Since we're in the wrong industry, using your logic it sounds like everyone picked the wrong provider (us.)

@Mumbly said: just so that they know they aren't forgotten, could help the situation.

Even coming at this from any angle, let's say we're evil, in what situation would we just "forget" we have like tens of thousands of dollars of equipment with Flexential? We already said equipment is destined for a datacenter in Atlanta, so at the very least if we assume someone trusts any of our updates and that means even if we are going to nuke everyone's data afterward or don't plan on bringing services online, that'd happen once it's redeployed. We haven't even got to that point. I don't see any reason to provide anything falsely reassuring. I could keep repeating that we've contacted Flexential and they said everything is great, over and over. Then it'd just be unhelpful and also annoying. For all we know, Flexential does plan on just taking the equipment or whatever and it's fully out of our control in this scenario.

If you check back in the history of comments here and the status updates for their locations for any changes at all, Flexential was pretty much useless before all of these issues. It's why I migrated services away from them a while back and why VirMach was trying to find an alternate provider in those areas long before these issues.

So I have no doubt that they are completely useless at this point when they probably have a bunch of extra people contacting them and expecting them to actually do something.

While I know people are upset at their services not working in those spots, just imagine how much more upset Virmach is having massive amounts of hardware they've bought being in Flexential hands and not knowing if they will get them back on top of having angry customers demanding updates they simply don't have.

No one is happy in the situation, but can we try to at least be civil guys?

@AlSwearengen said: You picked the wrong providers if you can't get responses in less than a week. You need new leadership that can get shit done in a reasonable timeframe. This is mind boggling, really, your attitude with so many down customers. You're in the wrong industry.

You're right we should have picked another provider and then got them to infiltrate Flexential datacenters in an operation to get the servers back since the servers are still within Flexential providers. Since we're in the wrong industry, using your logic it sounds like everyone picked the wrong provider (us.)

I have no idea what you're referring to. Your Seattle update said hardware was shipped to LA from Seattle (after the waste-of-time failed attempt to get set up in Seattle) and just waiting on "migrations". For 11 days. It's apparently not hardware that can just get powered on and IPs assigned (as implied before) but even data transfer would be done by now.

Edit: what philosophical debate would that be? That service providers should take downtime seriously? Or that customers expect better than week-plus updates where nothing happened? I'm not clear what purpose gaslighting me serves here.

Yes, everyone picked the wrong provider. But you seem to be helping users by having renewals come and go without usable servers and forced to go elsewhere regardless.

Over the eight years or so that I have been a VirMach customer I've been pretty happy with VirMach overall. I expect that the really good service I have had in the past, and their giveaway prices, have prompted me to be a bit more calm about the current situation. That, and the fact that 19 of my 20 VMs are working just fine.

Although I am looking to replace a Dallas VM that was moved to NYC. Do you know of any non-ColoCrossing provider I might move to?

The specs are:

Four Ryzen 5950x cores (or one dedicated core), 4GB RAM, 130GB NVMe, 20TB transfer, 3 IPv4, $20 a year.

If I don't find a similar deal in Dallas I probably will just wait for Virmach to open up OKC and move there.

@AlSwearengen said: I have no idea what you're referring to. Your Seattle update said hardware was shipped to LA from Seattle (after the waste-of-time failed attempt to get set up in Seattle) and just waiting on "migrations". For 11 days. It's apparently not hardware that can just get powered on and IPs assigned (as implied before) but even data transfer would be done by now.

Okay, sure, let's go with this since you seem personally interested and believe we didn't provide enough details or frequency in updates. I've recreated the status update for you as it would look if I had done it the way you desired, which I personally think would have been unhelpful. Keep in mind I've kind of ballparked the times here but all of these things did happen, and I may be forgetting a few extra things here and there which would have only made it more complicated. All this would do is give people little things they could pick apart, get confused over, or whatever else, and would not actually help customers figure out when something would be back up or pretty much anything else unless it were even more detailed.

Original:

Seattle service deployments will be tracked here.

Currently, we are still waiting for deployment in Los Angeles and loaded from backups

Seattle has released equipment and they will soon be en route to San Jose

Update 9/28 -- These are being loaded into new Los Angeles servers now.

Please note, if you have a currently open ticket regarding a Seattle server, your migration may be paused until we can read your ticket first.

Update 10/02 -- We've ran into issues and some delays which mean a portion of these changes have not yet fully completed. It's possible networking or other issues exist and we are working on resolving them. SEAZ009 and SEAZ010 are still awaiting migration.

Update 10/13 -- We're working on moving the final two servers and fixing any remaining network issues.

Custom version, made just for you:

Seattle service deployments will be tracked here.

Currently, we are still waiting for deployment in Los Angeles and loaded from backups

Seattle has released equipment and they will soon be en route to San Jose

Update: Seattle has misunderstood our request, and even though labels were sent, they were not properly forwarded internally.

Update: There's an issue with server deployments in Los Angeles as the power drop was communicated incorrectly internally within the datacenter staff, and PDU was not deployed as originally communicated to us.

Update: Seattle has received labels again, but they are asking us again for specific equipment information, even though they previously stated that it was found.

Update: Server which hasn't been named yet in cabinet which hasn't been named yet is having issues that haven't been determined yet.

Update: Issue has been determined, it was an issue with BMC, we apologize for not going into further detail due to time constraints in making this as a reply to a single person on this one but rest assured someone in the future has already fixed it.

Update: Turns out that the network drop wasn't working, we left the facility after deploying everything but waiting on networking to get back to us.

Update: Some questions were asked and answered, we're waiting for networking to complete this task.

Update: Networking team at the datacenter has brought the switch online.

Update: We are correcting some issues with LOA, the IRR wasn't ready at the time originally, but now is available, so we're making sure announcement is corrected.

Update 9/28 -- These are being loaded into new Los Angeles servers now. Please remember that these are being loaded in from backups, and we are not speaking of the original servers destined for San Jose.

Update -- Backup server is being overloaded by some abuse, it's been handled throughout this time but delayed backup loading slightly, once again we apologize for not describing each instance of abuse specifically, but at least one of them had to do with a particular set of operations that were causing stress on that particular portion of the disk(s) for that particular backup and a specific abuser who was using their service as a fileserver. We were able to identify this with various tools, and determined it did not fall in line with our AUP in several ways before suspending the customer's service. About 6 others have been powered off and are being assisted within their respective tickets.

Update -- LAX1??? is having a particular issue with SolusVM setup, for some reason there is a specific desync between SolusVM being set up on new slaves versus an older slave having received updates throughout the years.

Update -- LAX1??? is having issues with one disk not showing up, likely during the bumpy car ride. We apologize for not using a vehicle with smoother suspension to move these servers. We're having them take a look.

Update -- LAX1??? has been resolved.

Update -- Please remember that these backups are being loaded in from backup servers that use hard drives, and as a result it's taking a while for them to complete.

Update -- We are allocating IP blocks to these nodes to get them ready.

Update -- There's been a complication related to node TPAZ005 and NYCB027. The server that one of these was slated to go to is having issues, which once again we cannot get into as this is all simulated for a single comment to a single person but rest assured it will take a very long time and create a backlog of other issues, enjoy.

Update -- There's been a second complication related to nodes mainly in Hivelocity, they're not doing well with one particular update that's causing a particular issue with SolusVM and delaying the other migration, we are going to spend the next 2 days identifying this while simultaneously doing everything else and updating everything else, we'll create another network status page for this even though it's not affecting customers directly, oh wait we did, but we should have probably also provided more updates there as well and created a third for the dynamic between this status update and that one.

Update -- Some of this is being handled by someone else within our team, I am not going to ask him to create simulated updates for the portion he did but they would be inserted here.

Update -- TPAZ005 had to be partially loaded in due to the original node it was going to having issues and needed to be split off, since we're also using other nodes for other things we're trying to locate a suitable node for the rest.

Update -- A suitable node for the rest has not yet been located, we apologize for the wait.

Update -- LAX1???? has been loaded in but is having network bridge issues. We do not have time to work on this right now but we'll keep updating you on when we begin work on this.

Update -- A suitable node has not been found yet, we apologize for the wait. The network bridge issue has not been fixed yet, we apologize for the wait.

Update -- TPAZ005 nodes have been backed up into another node, this is part of another previous issue and unrelated to this one but we just wanted to let you know we had to spend time on that.

Update -- We've had to step away from this for a moment as we're also doing several other major things. We apologize for the delay.

Please note, if you have a currently open ticket regarding a Seattle server, your migration may be paused until we can read your ticket first. This is due to people making custom requests in these tickets so we have to read through them and determine if they need other work done so we don't end up loading them in several times.

Update 10/02 -- We've ran into issues and some delays which mean a portion of these changes have not yet fully completed. It's possible networking or other issues exist and we are working on resolving them. SEAZ009 and SEAZ010 are still awaiting migration.

These issues include the previously mentioned issues, as well as a few new issues listed below.

Due to some modifications that had to be made, these didn't end up exactly where they needed to be and we have to shift some of them around for connectivity.

Update -- Oh wait before I can list the rest of those, new update for Seattle to San Jose, they were asking us for another label because they wanted to put the power cables and such into their own box. We provided them with this label.

Update -- Seattle has confirmed these are ready for pickup the next day after a few more emails, UPS did not pick up the servers.

Update -- Seattle, UPS has not picked this up again. We contacted them to ask them if we should be putting any particular information for UPS to pick them up.

Update -- Seattle DC has not confirmed with us if we should change the pickup requests, we will make other pickup requests.

Update -- Another UPS pickup request has been made.

Update -- Another UPS pickup request has been made.

Update -- Some backups have been loaded in, we're working on fixing remaining issues.

Update -- LAX1??? has been having problems with SolusVM API and IP changes have been delayed while we look into that issue.

Update -- TPAZ005 backups have been completed.

Update -- LAX1???? had an issue related to the other issue described above and has been corrected.

Update -- LAX1???? required port changes, this has been scheduled.

Update -- LAX1???? port changes have been delayed

Update -- Some servers moved from these to NYC servers are having issues, we'll create another branch of updates for that but they won't be posted here as they're not exactly fully related. This will cause some delays here though.

Update -- Due to the way our scripts work, it doesn't take into account this exact scenario, and some modifications had to be made, and some additional manual work had to be done to sort out some particular VM migrations.

Update -- Some VMs booting up are overloading LAX1????, these would have usually been powered off but we don't have backlogs of server offline/online status from previous nodes, we apologize, and are working on identifying and powering these down.

Update -- Servers have been received in San Jose.

Update -- We are scheduling with our tech a time to go to San Jose

Update -- Please check semi-related network status page where all of San Jose is coincidentally down right as we receive the servers and trying to schedule setup for them.

Update -- There's been a delay for servers in San Jose moved in from Seattle, as the outage above is continuing.

Update -- LAX1??? has had the issues with SolusVM or whatever resolved, LAX1??? has had the other issues described above resolved as well.

Update -- Oh we noticed some of you may not actually know which of these nodes you got moved from and to for every particular service, let us spend the next 1000 words going over that.

Update -- NYCB027 has been moved off finally after some challenges, we are repurposing this node to bring on the rest of Seattle customers.

Update -- TPAZ005 has been moved off finally after some challenges, we are repurposing this node to bring on the rest of Seattle customers.

Update -- There has been a delay due to other issues.

Update -- LAX1??? through LAX1???? have been audited and some discrepancies on a few leftover VMs have been corrected.

Update -- We've been tracking some network issues here and working on that as well, but it may take some time as that's not the priority yet.

Update -- San Jose setup has been delayed as they only set up the cabinet with enough cage nuts for about 13 servers, and we were too stupid to just order more cage nuts, their tech tells us that they definitely have no more cage nuts. We're also waiting on PDU change.

Update -- We are receiving reports of specific issues with certain VMs, we have not yet got to troubleshooting these particular issues but please remember these are loaded in from backups. Please wait until this is resolved to make tickets for any remaining issues.

Update -- We've locked off these nodes for now as we're receiving too many requests for further updates, because it turns out even though we've provided more updates here now, that's just made people not read through them and make even more tickets asking specific sub-updates for each update.

Update -- There's been another delay due to blah blah blah.

Update 10/13 -- We're working on moving the final two servers and fixing any remaining network issues.

P.S. I know that's not detailed enough for you and I've dealt with too much these last two weeks to perfectly remember everything, pretend I also described each sub-issue in detail.

@VirMach said: Hey I'm just following the instructions in the thread title. I can gauge when a serious response is necessary and this isn't one of those situations. The guy is just here to try to win a philosophical debate and anything I say won't change that.

Stop following the rules and be a rebel like the Yeti! Your stress levels will drop and the chicks will be all over you.

I think allowing a VPS to migrate from a bad node to a good node, instead of the long wait, would be a great help.

But it means VirMach will need more spare servers.

@nightcat said:

I think allowing a VPS to migrate from a bad node to a good node, instead of the long wait, would be a great help.

But it means VirMach will need more spare servers.

Agreed.

But it looks like since you can't open the product detail page, you shouldn't be able to call the migration function either, so you'll have to wait for DC to release the hostages, right?

Celebrating the full month of DALZ007 being offline!

@Virmach@FrankZ

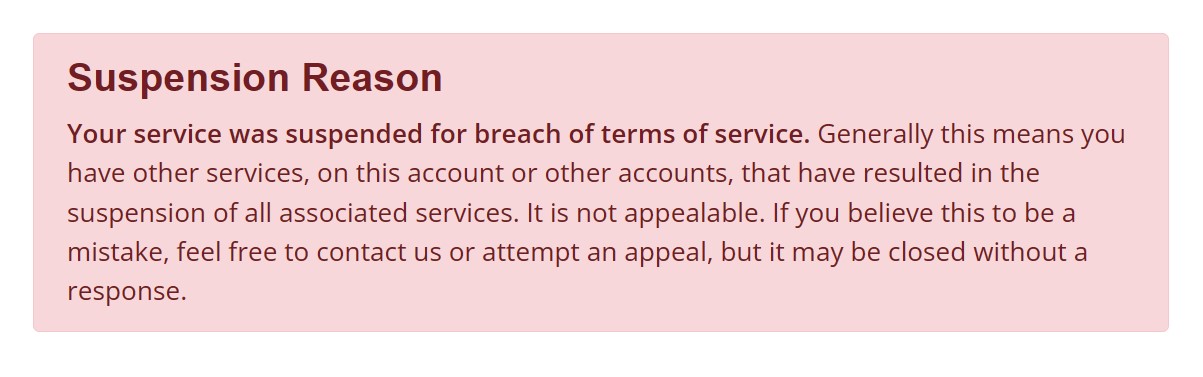

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please tell me specific multi-account information or even evidence. Rather than blithely saying that you have multiple accounts, so we will suspend your machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

@omission said: @Virmach@FrankZ

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please tell me specific multi-account information or even evidence. Rather than blithely saying that you have multiple accounts, so we will suspend your machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

Did you receive an account or service from another person (including a "friend")?

@omission said: @Virmach@FrankZ

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please tell me specific multi-account information or even evidence. Rather than blithely saying that you have multiple accounts, so we will suspend your machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

thank you for sharing. my faith in virmach is slowly getting restored

@omission said: @Virmach@FrankZ

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please tell me specific multi-account information or even evidence. Rather than blithely saying that you have multiple accounts, so we will suspend your machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

Did you receive an account or service from another person (including a "friend")?

No. I created this account myself and have never changed the account email address. This service is the only service I purchased after creating my account

I am doing backups every day. I can discard the data stored in the server and hope that Tokyo 038 can be restored quickly because my business is in Japan and I need its support.

@omission said: @Virmach@FrankZ

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please tell me specific multi-account information or even evidence. Rather than blithely saying that you have multiple accounts, so we will suspend your machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

Did you receive an account or service from another person (including a "friend")?

No. I created this account myself and have never changed the account email address. This service is the only service I purchased after creating my account

trick answer begets a trick question:

how many accounts have you created so far

So far I have only created one account on this site. The suspended service is the only service I purchased and was not pushed by others.

So far I have only created one account on this site. The suspended service is the only service I purchased and was not pushed by others.

My advice to you, if you really are innocent, is to just stay calm.

Reply to the ticket as clearly and honestly as possible.

And then wait patiently.

Unfortunately due to all the crisis things going on, it might take a bit longer than usual.

And if you are innocent, I realise this might be frustrating.

Usually Virmach is very good and accurate in identifying abuse and multi accounts/scalped accounts etc.

And he's also very lenient usually (doesn't act too quickly or too harsh).

Also he's usually open to listen and find solutions.

But for that, honesty is required.

So if you are in any way guilty, your best bet is to just be honest, confess, tell everything, and then he'll probably try to work something out and find a solution.

If you truly are innocent, then it is bad luck for you and bad timing, and might take a while.

But then try to be patient and trust that it will be resolved fairly in the end.

@tetech said:

The SEA->LAX machines seem to have correct IP and node is unlocked.

My SEA VPS was also unlocked in the Billing System, but it still had the old IP

Reconfiguring the Network in both panels returned success messages, but after login via VNC the VPS still had the old IP again and again. (also the PW reset returned success and a new PW, but the old one remained working instead of the new one)

In the end I manually set the new IP (on Ubuntu 22.04) via

nano /etc/network/interfaces

and then /etc/init.d/networking restart

now the VPS is connected again and works as expected.
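For anyone hitting the same thing, here is a minimal sketch of what such a manual entry could look like. It assumes the template uses ifupdown (`/etc/network/interfaces`) rather than netplan, which is the default on stock Ubuntu 22.04. The helper name `render_static_stanza`, the interface name `eth0`, and all addresses are placeholders made up for illustration (the addresses are from the documentation range), not real VirMach values; use the IP, netmask, and gateway shown in your own panel.

```python
import ipaddress


def render_static_stanza(iface: str, address: str, netmask: str, gateway: str) -> str:
    """Build a static IPv4 stanza for /etc/network/interfaces (ifupdown).

    Hypothetical helper for illustration only.
    """
    # Parse address and netmask together so a typo fails early.
    interface = ipaddress.ip_interface(f"{address}/{netmask}")
    gw = ipaddress.ip_address(gateway)
    # A gateway outside the subnet usually means a value was copied wrong.
    if gw not in interface.network:
        raise ValueError(f"gateway {gw} is not inside {interface.network}")
    lines = [
        f"auto {iface}",
        f"iface {iface} inet static",
        f"    address {interface.ip}",
        f"    netmask {interface.netmask}",
        f"    gateway {gw}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values only; replace with the ones from your panel.
    print(render_static_stanza("eth0", "192.0.2.10", "255.255.255.0", "192.0.2.1"))
```

The printed stanza is what would go into the file before restarting networking as described above. If your provider's gateway is legitimately outside the subnet, drop the subnet check.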

@nightcat said:

I think allowing a VPS to migrate from a bad node to a good node, instead of the long wait, would be a great help.

But it means VirMach will need more spare servers.

Agreed.

But it looks like since you can't open the product detail page, you shouldn't be able to call the migration function either, so you'll have to wait for DC to release the hostages, right?

Celebrating the full month of DALZ007 being offline!

We're already working on this and I went over how the system would work but at the time people seemed mostly opposed to it, perhaps because I made it overcomplicated or for other reasons. But I think we can at least try to improve the page and have a barebones manual version of making this request. The issue is that for the manual version, we won't be able to do it for all plans, unless we start standardizing them.

Anyway @tulipyun you can now request this for DALZ007 if you want that.

@jtk said:

So what if I told you a year later, even though it was migrated to a new network, hardware and addressing, the VM on the old network was still running?

Now is a good time to better explain this cryptic message from June. I have, though not for much longer, a 2019 BF VM from VirMach that was originally in the Buffalo location. After the migration away from ColoCrossing, this VM, like all VirMach VMs, changed addresses a few times. This one just happened to be one of the "unlucky" ones caught in the looonnng outage associated with NYCB027.

Anyway, somehow, some way, even though VirMach was migrating this VM around and the ColoCrossing relation was no more, at some point later I noticed the original VirMach VM at ColoCrossing lived on. Same system, creds, address, config, etc. I couldn't manage it outside of remotely logging into it, but it ran up until a few weeks ago. This wasn't the only VM I had on NYCB027, but only this one lived on at ColoCrossing that I saw. Reminds me of the urban legend of the server walled over and running for years after.

So while it was annoying to have VMs offline for over 4 months, it was a small win that the original clone was still running in no man's land for more than a year.

@Neoon said: Despite its unlocked state LAX1Z016 is still broken.

@DanSummer said:

Yeah, mine came back online yesterday (LAX1Z016), still not accessible from the outside world though.

I'm on the same node and my VPS works now. Log in via VNC and check if the new IP was assigned. I had to do it manually (the "repair network" button had no effect) and since then it has worked flawlessly.


All my services are restored; waiting only on ATLZ007B.

Which can be said without being a dick. I know because I did it.

Personally I do agree that there might be a couple fewer tickets submitted if VirMach dropped by here with a quick status update a little more often. Although I don't expect saying that you have nothing to add to the status page comments will do that much good. VirMach does seem to update us when he has something tangible to say.

The first comment by AlSwearengen may have been out of frustration, and it received a sarcastic answer from VirMach. The second comment by AlSwearengen was a bit of an escalation, so I stepped in before it got out of hand. That is the job as I see it.

A second thing that also influenced me was that AlSwearengen has never commented on VirMach before and has not stated that he is a VirMach customer affected by any of these outages. If he is a VirMach customer then I can understand his frustration, but I still cannot condone the tone.

This is not to say that it would have been one sided if I had not said something. I am sure that VirMach is more than able to provide many snarky/sarcastic comments of his own, and might even enjoy doing so. But where would that get us besides a shit show, and a Rant thread.

whoosh

The issue isn't the frequency of the updates, it's the lack of progress and the lack of details. This isn't acceptable, AT ALL.

You picked the wrong providers if you can't get responses in less than a week. You need new leadership that can get shit done in a reasonable timeframe. This is mind boggling, really, your attitude with so many down customers. You're in the wrong industry.

Hey I'm just following the instructions in the thread title. I can gauge when a serious response is necessary and this isn't one of those situations. The guy is just here to try to win a philosophical debate and anything I say won't change that.

You're right we should have picked another provider and then got them to infiltrate Flexential datacenters in an operation to get the servers back since the servers are still within Flexential providers. Since we're in the wrong industry, using your logic it sounds like everyone picked the wrong provider (us.)

Even coming at this from any angle, let's say we're evil, in what situation would we just "forget" we have like tens of thousands of dollars of equipment with Flexential? We already said equipment is destined for a datacenter in Atlanta, so at the very least if we assume someone trusts any of our updates and that means even if we are going to nuke everyone's data afterward or don't plan on bringing services online, that'd happen once it's redeployed. We haven't even got to that point. I don't see any reason to provide anything falsely reassuring. I could keep repeating that we've contacted Flexential and they said everything is great, over and over. Then it'd just be unhelpful and also annoying. For all we know, Flexential does plan on just taking the equipment or whatever and it's fully out of our control in this scenario.

If you check back through the history of comments here and the status of any changes in their locations, Flexential was pretty much useless before all of these issues. It's why I migrated services away from them a while back and why VirMach was trying to find an alternate provider in those areas long before these issues.

So I have no doubt that they are completely useless at this point when they probably have a bunch of extra people contacting them and expecting them to actually do something.

While I know people are upset at their services not working in those spots, just imagine how much more upset Virmach is having massive amounts of hardware they've bought being in Flexential hands and not knowing if they will get them back on top of having angry customers demanding updates they simply don't have.

No one is happy in the situation, but can we try to at least be civil guys?

I have no idea what you're referring to. Your Seattle update said hardware was shipped to LA from Seattle (after the waste of time fail to get setup in Seattle) and just waiting on "migrations". For 11 days. It's apparently not hardware that can just get powered on and IPs assigned (as inferred before) but even data transfer would be done by now.

Edit: what philosophical debate would that be? That service providers should take downtime seriously? Or that customers expect better than week plus updates where nothing happened? I'm not clear what gaslighting me serves your purpose here.

Yes, everyone picked the wrong provider. But you seem to be helping users by having renewals come and go without usable servers and forced to go elsewhere regardless.

Over the eight years or so that I have been a VirMach customer I've been pretty happy with VirMach overall. I expect that the really good service I have had in the past, and their giveaway prices, have prompted me to be a bit calmer about the current situation. That, and the fact that 19 of my 20 VMs are working just fine.

Although I am looking to replace a Dallas VM that was moved to NYC. Do you know of any non-ColoCrossing provider I might move to?

The specs are:

If I don't find a similar deal in Dallas I probably will just wait for Virmach to open up OKC and move there.

Okay, sure, let's go with this since you seem personally interested and believe we didn't provide enough details or frequency in updates. I've recreated the status update for you as it would look if I had done it the way you desired, which I personally think would have been unhelpful. Keep in mind I've kind of ballparked the times here but all of these things did happen, and I may be forgetting a few extra things here and there which would have only made it more complicated. All this would do is give people little things they could pick apart, get confused over, or whatever else, and would not actually help customers figure out when something would be back up or pretty much anything else unless it were even more detailed.

Original:

Custom version, made just for you:

P.S. I know that's not detailed enough for you and I've dealt with too much these last two weeks to perfectly remember everything, pretend I also described each sub-issue in detail.

Stop following the rules and be a rebel like the Yeti! Your stress levels will drop and the chicks will be all over you.

I think allowing a VPS to be migrated from a bad node to a good node, instead of the long wait, would be a great help.

But it means VirMach will need more spare servers.

Was there a problem with RYZE.LAX-A024.VMS now? My VM is not booting up; it says offline in the SolusVM panel.

Agreed.

But it looks like since you can't open the product detail page, you shouldn't be able to call the migration function either, so you'll have to wait for DC to release the hostages, right?

Celebrating the full month of DALZ007 being offline!

The SEA->LAX machines seem to have correct IP and node is unlocked.

@Virmach @FrankZ

My machine was suspended, and the reason for the suspension was: "Generally this means you have other services, on this account or other accounts, that have resulted in the suspension of all associated services."

Please give me specific multi-account information or even evidence, rather than blithely saying that I have multiple accounts and suspending my machine.

My machine url: https://billing.virmach.com/clientarea.php?action=productdetails&id=631784

Did you receive an account or service from another person (including a "friend")?

thank you for sharing. my faith in virmach is slowly getting restored

I bench YABS 24/7/365 unless it's a leap year.

No. I created this account myself and have never changed the account email address. This service is the only service I purchased after creating my account

I am doing backups every day. I can discard the data stored in the server and hope that Tokyo 038 can be restored quickly because my business is in Japan and I need its support.

trick answer begets a trick question:

how many accounts have you created so far

So far I have only created one account on this site. The suspended service is the only service I purchased and was not pushed by others.

My advice to you, if you really are innocent, is to just stay calm.

Reply to the ticket as clearly and honestly as possible.

And then wait patiently.

Unfortunately due to all the crisis things going on, it might take a bit longer than usual.

And if you are innocent, I realise this might be frustrating.

Usually Virmach is very good and accurate in identifying abuse and multi accounts/scalped accounts etc.

And he's also very lenient usually (doesn't act too quickly or too harsh).

Also he's usually open to listen and find solutions.

But for that, honesty is required.

So if you are in any way guilty, your best bet is to just be honest, confess, tell everything, and then he'll probably try to work something out and find a solution.

If you truly are innocent, then it is bad luck for you and bad timing, and might take a while.

But then try to be patient and trust that it will be resolved fairly in the end.

Hope this helps.

My SEA VPS was also unlocked in the Billing System, but it still had the old IP

Reconfiguring the Network in both panels returned success messages, but after login via VNC the VPS still had the old IP again and again. (also the PW reset returned success and a new PW, but the old one remained working instead of the new one)

In the end I manually set the new IP (Ubuntu 22.04) by editing

nano /etc/network/interfaces

and then running

/etc/init.d/networking restart

Now the VPS is connected again and works as expected.
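For anyone attempting the same manual fix, a static stanza in /etc/network/interfaces might look roughly like the sketch below. This is only an illustration: the interface name `eth0` and all addresses are placeholders (documentation-range values, not real VirMach assignments), and it assumes the VPS template uses ifupdown rather than netplan. Use the IP, netmask, and gateway shown for your service in the control panel.

```
# /etc/network/interfaces (ifupdown) - illustrative values only
# eth0 and every address below are assumptions; replace them with
# the values listed for your VPS in the panel.
auto lo
iface lo inet loopback

auto eth0
iface eth0 inet static
    address 192.0.2.10
    netmask 255.255.255.0
    gateway 192.0.2.1
```

After restarting networking, `ip addr show` should list the new address on the interface if the change took effect.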

We're already working on this and I went over how the system would work, but at the time people seemed mostly opposed to it, perhaps because I made it overcomplicated or for other reasons. But I think we can at least try to improve the page and have a barebones manual version of making this request. The issue is that for the manual version, we won't be able to do it for all plans unless we start standardizing them.

Anyway @tulipyun you can now request this for DALZ007 if you want that.

ATLZ009 has working IP address now, how am I meant to complain now?

yeah all servers online now (at least mine)

Today I found my VirMach service unlocked.

According to @VirMach, it had been locked due to ticket complaints.

Despite its unlocked state LAX1Z016 is still broken.

Here we go again, wooooooooooooooooooooooooooooooooooooooooooooooooo

Yeah, mine came back online yesterday (LAX1Z016), still not accessible from the outside world though.

Now is a good time to better explain this cryptic message from June. I have, though not for much longer, a 2019 BF VM from VirMach that was originally in the Buffalo location. After the migration away from ColoCrossing, this one, like all VirMach VMs, changed addresses a few times. It just happened to be one of the "unlucky" ones caught in the looonnng outage associated with NYCB027.

Anyway, somehow, some way, even though VirMach was migrating this VM around and the ColoCrossing relation was no more, at some point later I noticed the original VirMach VM at ColoCrossing lived on. Same system, creds, address, config, etc. I couldn't manage it outside of remotely logging into it, but it ran up until a few weeks ago. This wasn't the only VM I had on NYCB027, but only this one lived on at ColoCrossing that I saw. Reminds me of the urban legend of the server walled over and running for years after.

So while it was annoying to have VMs offline for over 4 months, it was a small win that the original clone was still running in no man's land for more than a year.

I'm on the same node and my VPS works now. Log in via VNC and check whether the new IP was assigned. I had to set it manually (the "repair network" button had no effect) and since then it has worked flawlessly.