HPE server, logical drives failing as soon as created.

Hello,

I have a ProLiant DL325 Gen10 giving me issues with the RAID card.

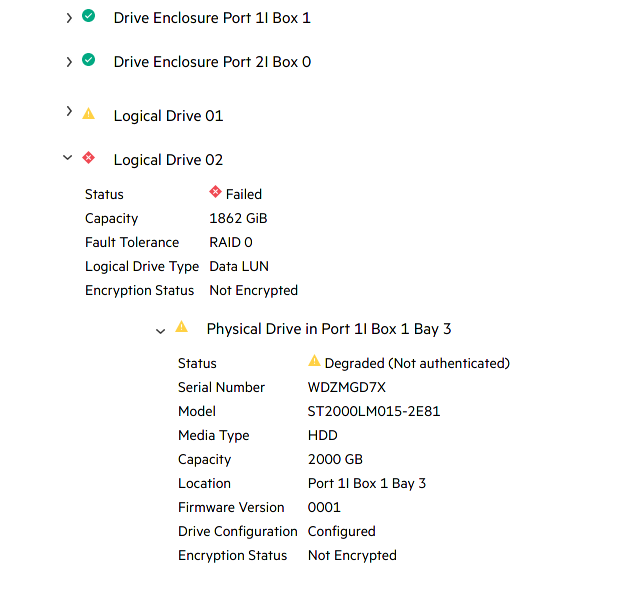

Basically, since this controller doesn't support JBOD, I created one array for each bay, but after some time the server goes into degraded mode and all logical drives are marked as failed. The physical drives are still OK (just "degraded not authenticated").

I have no idea why this happens, but I should mention that these drives were used in another RAID system in the past, so they could still have some headers on them. Is there something I can do to tell the system "I don't care about this data, just start over" and see whether the issue is actually caused by the drives?

Comments

Those look like 5400 RPM laptop drives? RAID controllers have various sensitivities but generally they want TLER enabled and spin down disabled. If your disks are spinning down the array will possibly mark them as failed if they can’t spin up fast enough. I’ve found HP raid controllers seem more sensitive than say Dell PERC in the past.
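For anyone wanting to check those two settings, here is a rough sketch using `smartctl` and `hdparm`. It assumes the drive is visible to Linux as a plain `/dev/sdX` device (a placeholder name), which is usually not the case behind a Smart Array controller, where `smartctl` typically needs a `-d cciss,N` style device option. The `run` helper and the `DRY_RUN` variable are made up for this example; by default the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: inspect (and optionally change) error-recovery and spin-down settings.
# /dev/sdX is a placeholder. DRY_RUN=1 (the default) only prints the commands.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

show_drive_timers() {
    dev=$1
    run smartctl -l scterc "$dev"   # report SCT Error Recovery Control (TLER) timeouts
    run hdparm -B "$dev"            # report the current APM level
}

set_drive_timers() {
    dev=$1
    run smartctl -l scterc,70,70 "$dev"   # limit read/write recovery to 7.0 seconds
    run hdparm -S 0 "$dev"                # disable the standby (spin-down) timer
}

show_drive_timers /dev/sdX
```

Many laptop drives do not support SCT ERC at all, in which case `smartctl` will report that and there is nothing to set. To actually run the commands, call the functions with `DRY_RUN=0` as root.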

We had a customer run laptop drives in a dedicated HP DL360 and had to convert the raid controller to IT mode for it to stop dropping the disks from the array.

You should be able to get logs from the controller to confirm this is the cause - google the controller model and “how to access logs”. The HP utilities in Windows or Linux should provide them.


This makes sense. The controller here is an "HPE Smart Array P408i-a SR Gen10". I couldn't find anything about IT mode. Is there some alternative firmware I can flash on it, or should I just buy another RAID card and put it in?

In the past, all HPE controllers (and Dell and others) were in fact LSI controllers, so you can try to google your way through HPE docs or use LSI utilities to detect what the real part number is. You can then download LSI firmware.

Warning! Flashing can brick controller, do your research!

I would say it's unlikely and @ionswitch_stan's version is better, but you can use dd to destroy all data:

# dd if=/dev/zero of=/dev/sdX bs=1M

or

# dd if=/dev/zero of=/dev/sdX bs=1M count=100

to destroy the first 100MB.
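A possible variation, in case a full wipe takes too long: some RAID formats keep their metadata near the end of the disk rather than the start, so zeroing both ends may be more thorough than zeroing only the beginning. The sketch below is only an illustration; `wipe_ends` is a made-up helper name, `/dev/sdX` is a placeholder, and it is just as destructive as the commands above.

```shell
#!/bin/sh
# wipe_ends TARGET [MIB]: zero the first and last MIB mebibytes of TARGET.
# TARGET can be a block device or a regular file (handy for a dry run).
# Hypothetical helper for illustration; it destroys data on TARGET.

wipe_ends() {
    target=$1
    mib=${2:-100}

    if [ -b "$target" ]; then
        bytes=$(blockdev --getsize64 "$target")   # size of a block device
    else
        bytes=$(wc -c < "$target")                # size of a regular file
    fi

    sectors=$((bytes / 512))
    count=$((mib * 2048))                         # 2048 sectors of 512 bytes = 1 MiB
    [ "$count" -le "$sectors" ] || count=$sectors

    # Zero the start of the target without truncating it.
    dd if=/dev/zero of="$target" bs=512 count="$count" conv=notrunc 2>/dev/null
    # Zero the end of the target.
    dd if=/dev/zero of="$target" bs=512 seek=$((sectors - count)) \
        count="$count" conv=notrunc 2>/dev/null
}

# Example (run as root, after double-checking the device name):
# wipe_ends /dev/sdX 100
```

If only known filesystem or RAID signatures need to go, `wipefs -a /dev/sdX` from util-linux is another option.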

Hello, I'm back. Apparently @ionswitch_stan was spot on with what the problem was.

I've just disconnected the two SAS cables that ran from the backplane to the RAID controller and connected them directly to the motherboard's integrated SATA controller, and this solved every issue I had with it. I lost RAID of course, but I don't care, since I was planning to use the drives as JBOD and the controller didn't support that anyway.

Even the drive LEDs are working correctly now.

Thanks!!