You're one of those two. One keeps running out of memory (inside the VM) and crashing. The other one only ran out of memory once recently (in the last couple of days).

So (probably) your answer: yes, it's going to be a weekly thing unless you reduce your usage or consider in the future ordering a larger service to fit your needs. I do see right now you're only using about (super rough estimate) 393MB so if that's the normal usage you should technically be fine but maybe what you're running has a memory leak or it gets more cluttered/heavier use over time.
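
One way to check for gradual growth is to log memory use from inside the VM at intervals and compare the readings. A minimal Python sketch, assuming a Linux guest that exposes `MemTotal` and `MemAvailable` in `/proc/meminfo`; the sample figures are invented so the result lands near the 393MB estimate above, and `parse_meminfo` / `used_mib` are illustrative names:

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: kibibytes}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def used_mib(fields):
    """Memory in use in MiB, not counting reclaimable cache."""
    return (fields["MemTotal"] - fields["MemAvailable"]) / 1024


# Invented sample data, NOT taken from the affected VM.
SAMPLE = """MemTotal:         786432 kB
MemFree:           65536 kB
MemAvailable:     384000 kB
"""

print(f"{used_mib(parse_meminfo(SAMPLE)):.0f} MiB in use")
```

On a real guest, feed it the contents of `/proc/meminfo` instead of `SAMPLE` (for example from a cron job) and watch whether the number keeps climbing between reboots.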

So, uh I'm not a benchmark guy but is running yabs once supposed to take my SJCZ005B offline before completion?

Did it by any chance crash on the Geekbench part? I don't know if the code has a preventative measure where it doesn't continue if you don't meet the requirements, but it definitely did crash because of an out-of-memory error (most recently). Some other people reported an issue with Debian 11 which I've been looking into, so it could potentially be related if you're not doing anything crazy in there, but it seems like it was mostly regarding the disk.

You made it farther than they did though and they were unable to reboot.

But, for example, I never had a blue button to Redeploy in New Region. Anyway, it seems fine with Ubuntu 20 (still functional 30 minutes after reinstallation). With Debian 11 it was nonfunctional after a few minutes. Always. Both services.

Seems like there's a bug in the Request IP feature... I have requested to Migrate instead of Discount if no IP available, but then the ticket created is Discount instead

The API request is something like this: https://billing.virmach.com/clientarea.php?action=productdetails&id=X&ipcount=1&iprequired=1&ipchangetype=migrate

But in the actual ticket created it is:

I would like to request additional IPs and/or a discount on my service. I understand that if any discrepancy is found I will forfeit any additional IPv4 addresses on my service.

# of IPs I am missing: 1

# of IPs I need: 1

If there are not enough IPs I would like to: Discount

What happened...
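
To make the mismatch explicit, the two values quoted above can be compared directly. This Python sketch only parses the URL and the ticket line as posted (with the `X` placeholder left as-is); it does not call the billing panel, and the helper names are illustrative:

```python
from urllib.parse import urlparse, parse_qs

# Copied from the post above; "X" is the poster's placeholder for the service id.
REQUEST_URL = (
    "https://billing.virmach.com/clientarea.php"
    "?action=productdetails&id=X&ipcount=1&iprequired=1&ipchangetype=migrate"
)
TICKET_LINE = "If there are not enough IPs I would like to: Discount"


def requested_fallback(url):
    """Return the ipchangetype value sent in the request URL."""
    query = parse_qs(urlparse(url).query)
    return query["ipchangetype"][0]


def ticket_fallback(line):
    """Return the choice recorded after the last colon in the ticket line."""
    return line.rsplit(":", 1)[1].strip()


requested = requested_fallback(REQUEST_URL)
recorded = ticket_fallback(TICKET_LINE)
print(f"requested={requested!r} recorded={recorded!r} "
      f"match={requested.lower() == recorded.lower()}")
```

Running the same comparison over older tickets would be one way to find which requests were recorded with the wrong choice, assuming the original request parameters were logged somewhere.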

食之无味 弃之可惜 - Too arduous to relish, too wasteful to discard.

Confirmed, asking for it to be fixed and to take a look at older requests and ask if people would like to make a change.

Thanks, I am thinking the most straightforward / easy way is to just cancel all the pending "Request IP" tickets and ask everyone to submit again so that you can automate the process easily

@Jab said:

People with broken Debian 11: what node are you running on? Full name rather than just location.

Not certain location matters - I had failures (with Debian 11) in Frankfurt FFME002 (was force migrated from AMSKVM8)

Amsterdam AMSD026 and latest AMSD028

As I said before just needs Ryzen Debian 11 (from template)

apt-get update

apt-get upgrade

reboot

left with no root device /dev/vda1

Managed to get the latest free (thanks again) server working by installing Proxmox onto Debian 11; this uses its own kernel and then everything seems to work smoothly.

@msatt said: Not certain location matters - I had failures (with Debian 11) in Frankfurt FFME002 (was force migrated from AMSKVM8)

Amsterdam AMSD026 and latest AMSD028

Yeah, now to the fun part [yes, Debian 11 from SolusVM template]:

I have VPS at FFME002 (tested yesterday) - works.

I have VPS at AMSD028 (just rebooted) - works.

Random questions:

Did you reinstall template via WHMCS or via SolusVM? Should be the same, but maybe it's not?

Do you Power off via SolusVM before clicking reinstall template?

Does your cat /proc/cpuinfo show the actual CPU model (aka Passthrough CPU enabled)?

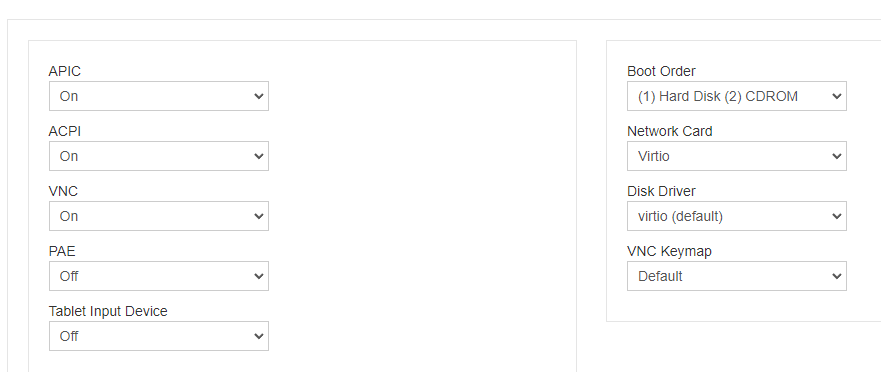

Did you change anything (on both machines?) in SolusVM in Settings panel?!

What product type/service is this? Like mine on AMSD028 is BF-SPECIAL-OVZ and the FFME002 is NVMe2G VPS - probably shouldn't matter, but you said you can break it every time, so maybe it matters?

Are those some crazy one from BF sales with idk crazy specs - 384MB of RAM or like 5GB disk?

Can you throw the product/serviceID (from the link clientarea.php?action=productdetails&id=XXX) of one of those broken Debian 11 servers? Maybe it was deployed with some different settings and a simple diff of those will allow VirMach to track wtf. Mine that work are 255227 and 675531. I know VirMach loves IPs more, but we all hate publicly posting IPs :P
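
For the passthrough question, the check boils down to reading the `model name` line. A rough Python sketch, assuming the usual `key : value` layout of `/proc/cpuinfo`; the generic QEMU name and the marker list are assumptions about what a non-passthrough guest might report, not something confirmed in this thread:

```python
# Substrings that suggest a generic emulated CPU name (assumed, not exhaustive).
GENERIC_MARKERS = ("QEMU", "Virtual CPU", "Common KVM")


def cpu_model(cpuinfo_text):
    """Return the first 'model name' value found in /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model name":
            return value.strip()
    return None


def looks_passed_through(model):
    """Heuristic: a real model name contains none of the generic markers."""
    return model is not None and not any(m in model for m in GENERIC_MARKERS)


# Sample lines; the second model name is a hypothetical generic value.
PASSTHROUGH = "processor\t: 0\nmodel name\t: AMD Ryzen 9 3950X 16-Core Processor\n"
GENERIC = "processor\t: 0\nmodel name\t: QEMU Virtual CPU version 2.5+\n"

for sample in (PASSTHROUGH, GENERIC):
    model = cpu_model(sample)
    print(model, "->", looks_passed_through(model))
```

On a live system the text would come from reading `/proc/cpuinfo` rather than from the sample strings.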

@gritty said: this boss has a lot of time to speak his mind at LES, but he didn't have any time to deal with the piles of tickets

If you provided the same kind of information in the ticket as you provided here (so almost nothing - a screenshot of the panel without IP/user account/serviceID/nodename... anything that would allow VirMach to find you?) and then, when he actually engaged with you and asked if that was AFTER Ryzen Migrate, you ignored him and did not reply... what do you really expect here and why are you shocked?

Did you reinstall template via WHMCS or via SolusVM? Should be the same, but maybe it's not? - Used SolusVM as Deb11 N/A in WHMCS

Do you Power off via SolusVM before clicking reinstall template? - No (was not aware I needed to)

Does your cat /proc/cpuinfo shows actual CPU model (aka Passthrough CPU enabled)? Passthrough shows up ok in YABS (before reboot)

Did you change anything (on both machines?) in SolusVM in Settings panel?! - No changes

My servers are working now, and I don't really wish to destroy them, as building them, although automated, has taken some time.

Last failure was on the freebie NVMe2G VPS (AMSD028) id=675535

Hopefully from the above this will help @VirMach as I know things are so quiet

*edit to add location of freebie and id as requested

No power-off pressed. After updating, I reboot from the terminal and it remains Offline. So I need to reboot from SolusVM for it to appear online, but it's nonfunctional. Even if I leave it as installed, it freezes during panel installation or, if the installation is successful, a few minutes later (the panel can be accessed for a few minutes, the domain can show up). After a few minutes - boom.

cat /proc/cpuinfo - model name : AMD Ryzen 9 3950X 16-Core Processor

no changes in SolusVM.

For example, I just did a new Debian 11 installation on VPS 2 (with 2 CPU), and after the update I rebooted and now it is inoperable. I cannot connect through SSH anymore. It must be reinstalled again and again and again... Or just choose another OS (Ubuntu is stable on VPS 1).

@dartagnan said: I reboot from terminal and remains Offline.

I don't have the same nodes as you (kinda, more on that later), but all I do differently is powering off the server (+ waiting 3 minutes) before reinstalling the template - VirMach kinda mentioned on OGF that something is funky and power off is the best bet. Maybe it's this? Do you have time to test? Go to SolusVM, click Power off (not shutdown), wait 3 minutes, click VNC (should complain server is offline), reinstall Debian 11 from template (first install takes long and seems like there is some reboot in the middle, so don't pay attention to VNC), wait like 10 minutes, login, apt-get update, apt-get upgrade, reboot.

--

@dartagnan said: Node in WHMCS = TPAZ002

Node in SolusVM = TPAZ005.VIRM.AC

@VirMach you drunk? I have it the other way around. I guess you need to rename one of those ^.-

(WHMCS) Node Name TPAZ005

(SolusVM) Node TPAZ002.VIRM.AC

@Jab said:

Do you have time to test? Go to SolusVM, click Power off (not shutdown), wait 3 minutes, click VNC (should complain server is offline), reinstall Debian 11 from template (first install takes long and seems like there is some reboot in middle, so don't pay attention to VNC), wait like 10 minutes, login, apt-get update, apt-get upgrade, reboot.

Power-Off for 4 mins

Click VNC - it shows me the port, the password... Sorry, I have never used VNC. I don't know how to use it. Anyway, the VPS is offline.

Reinstall Debian 11 from template. Wait 11 mins (it's online in SolusVM).

Login, update, upgrade aaaaaand reboooooot (from terminal).

Wait 3 mins and... Try to login... Nope. Unable.

ssh: connect to host hereismyip port 22: Connection timed out

With Status Online in SolusVM after reboot.
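
A quick way to confirm from outside whether SSH ever comes back after the reboot is to poll the port. This is only a sketch using Python's standard `socket` module; the function names, the retry counts and the example address are all made up for illustration:

```python
import socket
import time


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts, refused connections and unreachable hosts.
        return False


def wait_for_port(host, port, attempts=10, delay=15.0):
    """Poll host:port; return the attempt number that succeeded, or None."""
    for attempt in range(1, attempts + 1):
        if port_open(host, port):
            return attempt
        if attempt < attempts:
            time.sleep(delay)
    return None


# Example with a placeholder address (documentation range, not a real VPS):
# print(wait_for_port("203.0.113.10", 22))
```

If this keeps returning `None` while the panel reports Online, the next step is looking at the console over VNC to see what the guest is actually doing.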

When in this state, use VNC in WHMCS - it will open a terminal-type screen and you can confirm what is happening. Most likely it will be an error associated with being unable to mount /root on /dev/vda1

Need some info that may be useful in me recreating it. Do you have AES? How much RAM and disk?

(edit) I see you've already provided it, thanks. I was reading the thread backwards

Okay so I've located the issue, well at least what I've read makes sense. The SolusVM default Debian 11 template seems to have been created for "generation 2" which means it uses virt-resize.

It looks like possibly even Linode had a problem with this a little while ago, so I'm guessing what's happening, since you guys mentioned it happening after an update, is that when SolusVM generated the template the issue wasn't present, and that's probably why I wasn't able to reproduce it specifically. I was going to do the things I said, then got stuck doing something else. Anyway, it looks like Linode fixed it by being a big company and literally maintaining their own kernel.

I'm sure I can find a fix after poking around for a while but instead of getting everyone to do it over and over and over, at this point I just need to see if I can get it working and then template it and replace.

Anyway that's my 5 minute analysis on the matter but I'll try my very bestest to address this today.

^ Does same thing happen if Debian 11 is installed via netboot.xyz? Crap, what am I saying? Folks would have to configure stuff for themselves=silly me.

Just a thought.

If you must use a template: install Debian 10 and do a simple dist upgrade.

@VirMach said: I'll try my very bestest to address this today.

Surely there's higher priorities.

It wisnae me! A big boy done it and ran away.

NVMe2G for life! until death (the end is nigh)

@willie said:

In principle, it shouldn't be possible to crash a whole VM just because some application runs out of memory. I wonder what is going on.

I've touched on this in the past but the "short" (not really) version is that it's a feature, not a bug. It doesn't necessarily mean it actually crashed, which is why I said he should probably see what's going on in his VM, but it would have crashed if it wasn't a VM. Therefore it could essentially be a simulated crash. I didn't specifically mean that something's fully broken in that it's causing the VM to crash in the same way a computer might when physical memory is involved, but I can see how I could have said it differently. What I meant was that it's saying that he went over his limit.

The whole system or whatever you want to call it uses heuristics to make an educated guess based on the weird way it all works, and it's baked into the Linux kernel to deal with virtual memory versus physical memory and avoid a situation where theoretically let's say 50 different 1GB VMs actually end up getting some sort of default "authorization" (I know I'm butchering this when it comes to using the actual proper words) to use more than 1GB and then it runs out of physical memory and is potentially toast. My understanding is that there's no way for it to 100% know and therefore they had to build it this way, due to how virtual memory inherently works.
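
To put rough numbers on the 50 x 1GB scenario, here is a small arithmetic sketch in Python. The 32GB host and the 400MB typical usage are invented for illustration and say nothing about the real nodes:

```python
def overcommit_summary(vm_count, vm_limit_mb, host_ram_mb, typical_use_mb):
    """Compare memory promised to VMs with what the host physically has."""
    promised = vm_count * vm_limit_mb
    typical = vm_count * typical_use_mb
    return {
        "promised_mb": promised,
        "ratio": promised / host_ram_mb,
        "spare_at_typical_mb": host_ram_mb - typical,
        "shortfall_if_all_full_mb": max(0, promised - host_ram_mb),
    }


# Hypothetical host: 32 GiB of RAM, 50 VMs capped at 1 GiB, each using ~400 MiB.
summary = overcommit_summary(
    vm_count=50, vm_limit_mb=1024, host_ram_mb=32768, typical_use_mb=400
)
for key, value in summary.items():
    print(f"{key}: {value}")
```

With these made-up figures the host is comfortable at typical usage but would be about 18GB short if every VM hit its limit at once, which is the situation the kernel has to guard against.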

Now, there's two situations where this could happen and maybe there's more but again I'm speaking within the realms of our setup. One, the server is actually out of memory and it's doing it to protect the server. That doesn't really apply here because this server has plenty of RAM left over. If this were the case, it'd most likely pick a process that's using more memory, not a 512-768MB VPS but again it's based on the heuristics so there's no way to know for sure but hey if you trust them with the kernel then that means you trust them with this as well. If it was this scenario there'd be a lot more pitchforks and most likely from 4GB, 8GB plan customers.

The second situation is that it's just preventing that process from using more than what it's allowed to use. So if there's a spike it steps in. It's theoretically possible to disable this to some degree but then that opens the door for abuse and server crashes. It's again theoretically possible it could have made a bad decision but I highly doubt it given the circumstances.

Pretty much every time it's been looked into thoroughly, it's been correct once I perform exactly what the customer describes as doing when it happened.

I could have butchered that explanation but I wanted to try to be thorough. I'm sure someone way smarter than me can explain it more precisely, with details, correct me on technicalities, and so on but hey I never claimed to be a kernel expert. And yes, theoretically this could all be avoided if we disabled any overcommitting but again from my understanding that would actually mean more crashes because AFAIK programs can still request more memory and within each VM we'd also have to go in and disable it somehow (this part is speculation on my part, could be incorrect as I've never seriously considered doing it), and it would also mean we'd just have to give people less RAM for the same cost, just so most people would basically be locked in further and not be able to benefit from the perks of virtualization. Think of it like locking everyone to their actual share of CPU: everyone would not be able to ever burst and that means wasted processing power when some need it and it's not even being used by anyone else. Maybe for something like disk space it makes sense but not for CPU or RAM.
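
For anyone curious what their own kernel is set to, the overcommit policy is exposed through the `vm.overcommit_memory` sysctl. A minimal Python sketch that reads it, assuming a Linux system; the one-line descriptions are paraphrased summaries, and nothing here says which mode the host nodes actually use:

```python
from pathlib import Path

# Paraphrased summaries of the three documented vm.overcommit_memory modes.
OVERCOMMIT_MODES = {
    0: "heuristic: obviously excessive requests are refused, the rest allowed",
    1: "always: requests are never refused on overcommit grounds",
    2: "never: total commitments are capped at CommitLimit",
}


def describe_overcommit(mode):
    """Return a short description for a vm.overcommit_memory value."""
    return OVERCOMMIT_MODES.get(mode, f"unknown mode {mode}")


def read_overcommit_mode(path="/proc/sys/vm/overcommit_memory"):
    """Return the current mode as an int, or None if it cannot be read."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


mode = read_overcommit_mode()
print("not available on this system" if mode is None else describe_overcommit(mode))
```

Mode 2 is the "no overcommit" setting discussed above; under it, allocations can start failing once the commit limit is reached even though physical memory is still free.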

@AlwaysSkint said:

^ Does same thing happen if Debian 11 is installed via netboot.xyz? Crap, what am I saying? Folks would have to configure stuff for themselves=silly me.

Just a thought.

If you must use a template: install Debian 10 and do a simple dist upgrade.

@VirMach said: I'll try my very bestest to address this today.

Surely there's higher priorities.

When you do Debian 10 --> Debian 11 does the kernel version change to the same exact version which has the problem from Debian 11 --> Debian 11 update?

AKA you should upgrade to latest kernel as well and break your VM, for science (edit: okay fine not really.)
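
Comparing the two cases comes down to comparing `uname -r` output from each. A small Python sketch, assuming Debian-style release strings; both example strings are hypothetical and not taken from any of the affected servers:

```python
import re


def kernel_key(release):
    """Turn a `uname -r` string such as '5.10.0-18-amd64' into a sortable tuple."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)(?:-(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    # A missing ABI number (the part after the dash) is treated as 0.
    return tuple(int(part) if part is not None else 0 for part in match.groups())


def same_kernel(release_a, release_b):
    """True when both release strings carry the same version and ABI number."""
    return kernel_key(release_a) == kernel_key(release_b)


# Hypothetical values: one VM upgraded from Debian 10, one updated from the template.
upgraded_from_10 = "5.10.0-18-amd64"
updated_template = "5.10.0-18-amd64"
print(same_kernel(upgraded_from_10, updated_template))
```

On a live system `platform.release()` returns the running kernel's release string. The package version reported by `uname -v` may still differ between two kernels with the same release string, so it is worth comparing that too.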

^ Will try to find time to 'play' on one of the testbed ones, tomorrow.

In other news: I've just wiped a 2019 BF Special (you know, the 3 IP one) with the Alma Ryzen template, purely to enable pass-through. Then it'll get a proper reinstall using netboot.xyz.

@AlwaysSkint said:

^ Does same thing happen if Debian 11 is installed via netboot.xyz? Crap, what am I saying? Folks would have to configure stuff for themselves=silly me.

My NVMe2G is on PHXZ003.

I installed Debian 11 via netboot.xyz ISO.

After installation, the first boot shows: No bootable device.

The second boot succeeds.

Comments

@VirMach May I ask if you have any updates on the Tokyo Storage VPS? It's been 6+ months and it's still pending, thanks.

Found SJCZ005B VPS powered down again this morning (was able to boot it, though). Is this going to be a weekly/bi-weekly thing?

Haven't bought a single service in VirMach Great Ryzen 2022 - 2023 Flash Sale.

https://lowendspirit.com/uploads/editor/gi/ippw0lcmqowk.png

Can we save it? JP-STORAGE-500G !!!!!

Can you answer the user's question

@VirMach @VirMach @VirMach

May I ask if have any updates on Tokyo Storage VPS? already 6+ months, still pending, thanks.

@xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy @xiaobindiy

Stop being annoying.

1) BF-SPECIAL-2021 - BUFFALO, NY / 1 vCPU / 1920M RAM / 25G SSD / 3500G BW / 1 IPs - id=630940

Node in WHMCS = NYCB040

Node in SolusVM = RYZE.NYC-B040.VMS

2) BF-SPECIAL-2021 - BUFFALO, NY / 2 vCPU / 2688M RAM / 40G SSD / 5000G BW / 1 IPs - id=631147

Node in WHMCS = TPAZ002

Node in SolusVM = TPAZ005.VIRM.AC

You fucking cursed!

YABS of that free NVME2G box:

Really powerful VM, might just let it live an easy life and only host a few static sites on it. Thanks @VirMach !

@VirMach the buttons in the billing panel look very good now.

Although there are no locations available for paid migrations in any of my VMs.

Will this feature be available again in the future?

No hostname left!